Lessons from Visualization Research

Crowdsourcing via Amazon’s Mechanical Turk is a viable way to user-test visualizations

The study set out to test whether Turk is a viable platform for “graphical perception experiments.” It replicated the findings of prior, non-crowdsourced experiments and concluded that Turk is a viable option that offers several benefits.

Crowdsourcing a user test is defined as “web workers completing one or more small tasks, often for micro-payments of $.01 to $.10 per task.” Using a crowdsourcing platform such as Turk for user testing saves the client money and time. It also provides a larger sample size and a more diverse pool of testers.

A few observations on the quality of the answers received:

- The study found no direct relationship between higher payment and higher quality in an individual’s answers.

- The quality of Turk workers’ responses was high: fewer than 1% of responses were rejected as outliers.

- The study recommends two safeguards. First, add a pre-qualification task before the actual task to make sure workers understand what they will be asked to do. Second, include questions with verifiable answers in the task to discourage wildly incorrect answers or “gaming” by workers.
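The two safeguards above can be combined into a simple screening pass over submissions. The sketch below is a hypothetical illustration, not the procedure used in the study. The `WorkerSubmission` record, the `GOLD_KEY` values, the 10-point tolerance, and the "all verifiable answers must be correct" threshold are all assumptions chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WorkerSubmission:
    """Hypothetical record of one worker's submission."""
    worker_id: str
    passed_qualification: bool  # did the worker pass the pre-qualification task?
    gold_answers: dict[str, float] = field(default_factory=dict)  # answers to verifiable questions


# Hypothetical known-correct answers for the verifiable ("gold") questions,
# e.g. percentage judgments where the true value is known in advance.
GOLD_KEY = {"g1": 25.0, "g2": 60.0}


def passes_gold_check(sub: WorkerSubmission, key: dict[str, float],
                      tolerance: float = 10.0, min_fraction: float = 1.0) -> bool:
    """Return True if at least `min_fraction` of the verifiable questions were
    answered within `tolerance` of the known value (both thresholds are assumptions)."""
    if not key:
        return True
    correct = sum(
        1 for qid, truth in key.items()
        if qid in sub.gold_answers and abs(sub.gold_answers[qid] - truth) <= tolerance
    )
    return correct / len(key) >= min_fraction


def screen(submissions: list[WorkerSubmission], key: dict[str, float]):
    """Split submissions into accepted and rejected lists using both safeguards."""
    accepted, rejected = [], []
    for sub in submissions:
        if sub.passed_qualification and passes_gold_check(sub, key):
            accepted.append(sub)
        else:
            rejected.append(sub)
    return accepted, rejected


# Usage with made-up submissions:
subs = [
    WorkerSubmission("w1", True, {"g1": 27.0, "g2": 58.0}),   # close on both gold questions
    WorkerSubmission("w2", True, {"g1": 90.0, "g2": 5.0}),    # wildly wrong: possible gaming
    WorkerSubmission("w3", False, {"g1": 25.0, "g2": 60.0}),  # failed pre-qualification
]
accepted, rejected = screen(subs, GOLD_KEY)
print([s.worker_id for s in accepted])  # ['w1']
print([s.worker_id for s in rejected])  # ['w2', 'w3']
```

Requiring every verifiable answer to be correct is deliberately strict. A real study might instead tolerate one miss or reject only the individual answers that fall outside the tolerance.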

Source: Crowdsourcing Graphical Perception: Using Mechanical Turk to Assess Visualization Design, Jeffrey Heer and Michael Bostock. ACM Human Factors in Computing Systems (CHI), pp. 203–212, 2010

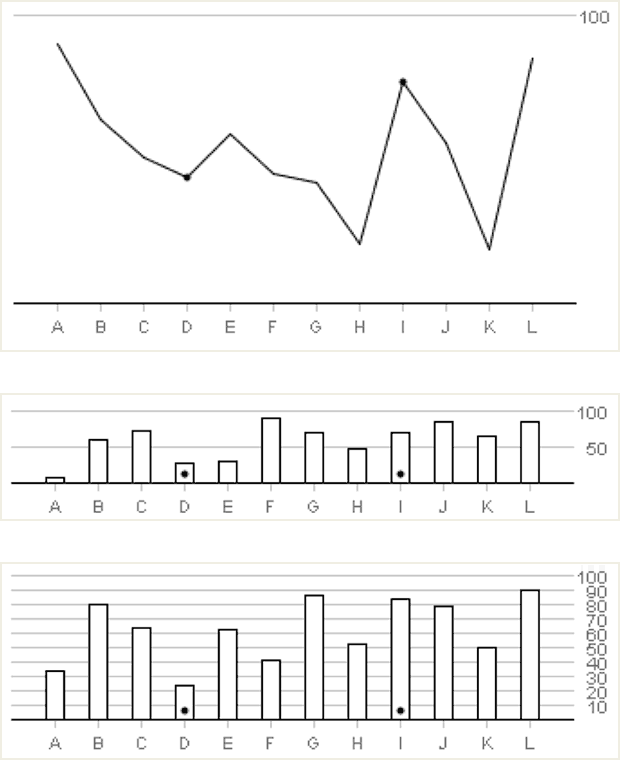

Experiment varying chart type, chart height, and gridline spacing.